Summary

Spatial control is a core capability in controllable image generation: the model must generate images that follow a given spatial input configuration. Advancements in layout-to-image generation have shown promising results on in-distribution (ID) datasets with similar spatial configurations. However, it is unclear how these models perform when facing out-of-distribution (OOD) samples with arbitrary, unseen layouts.

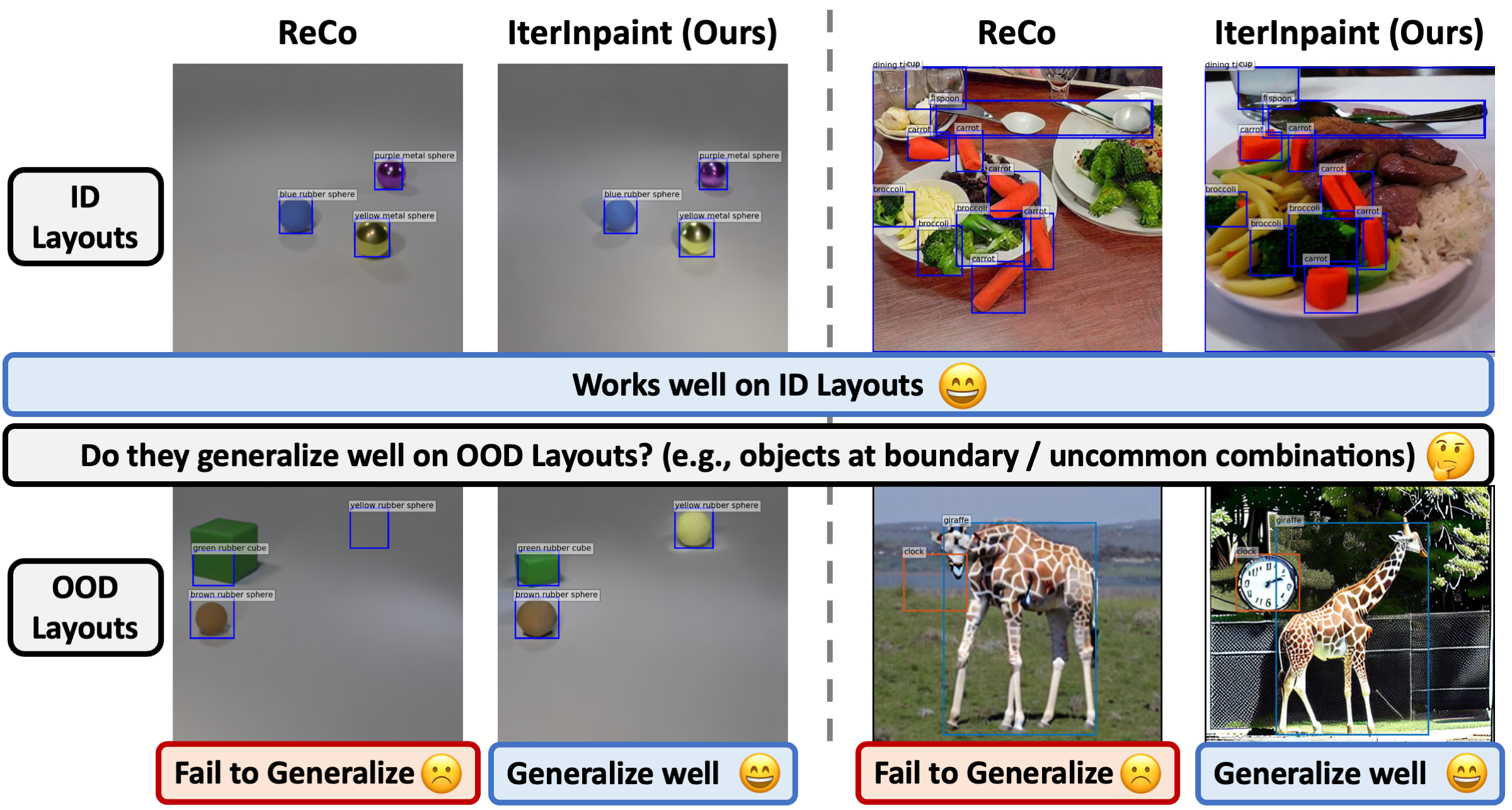

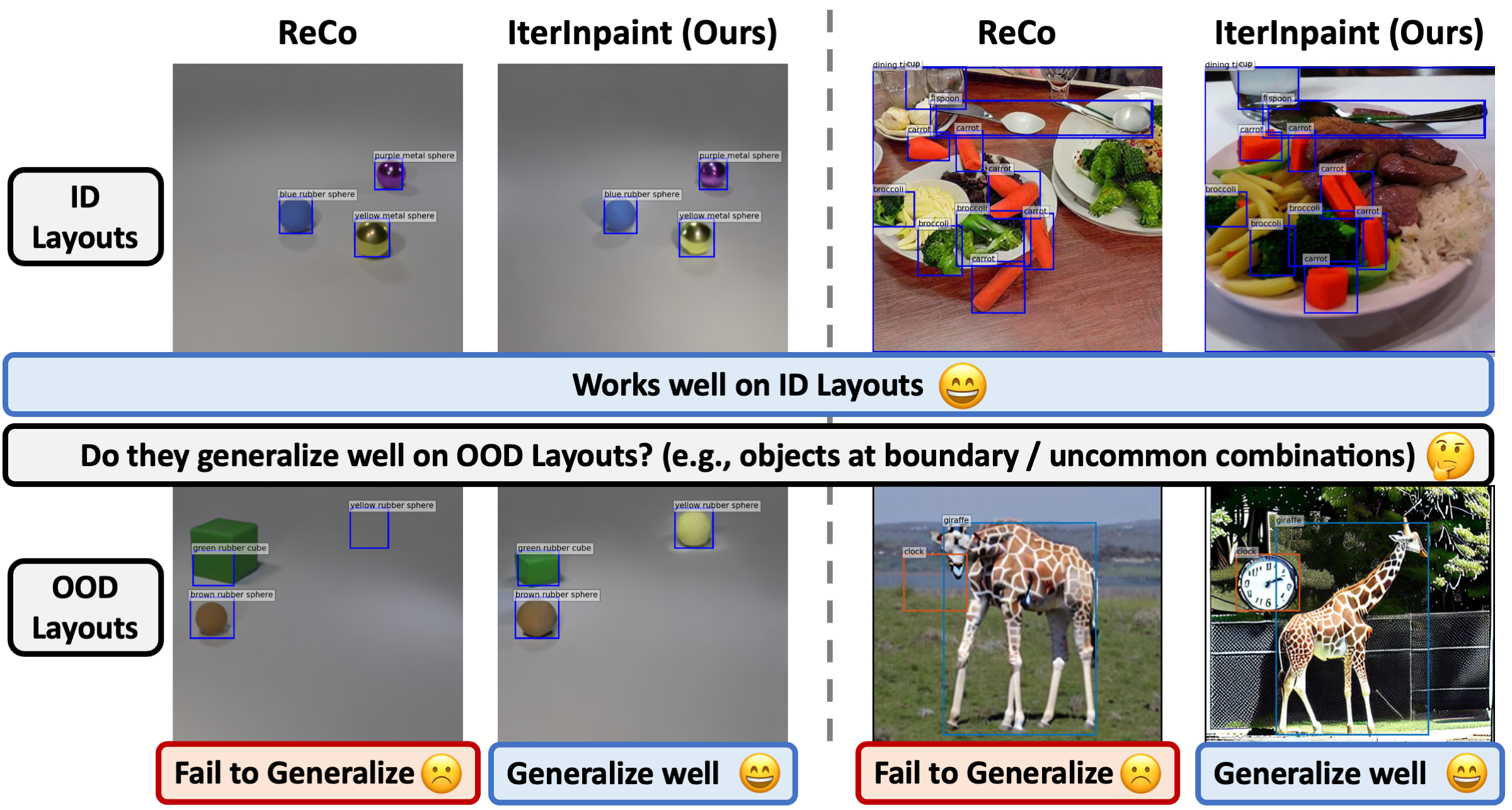

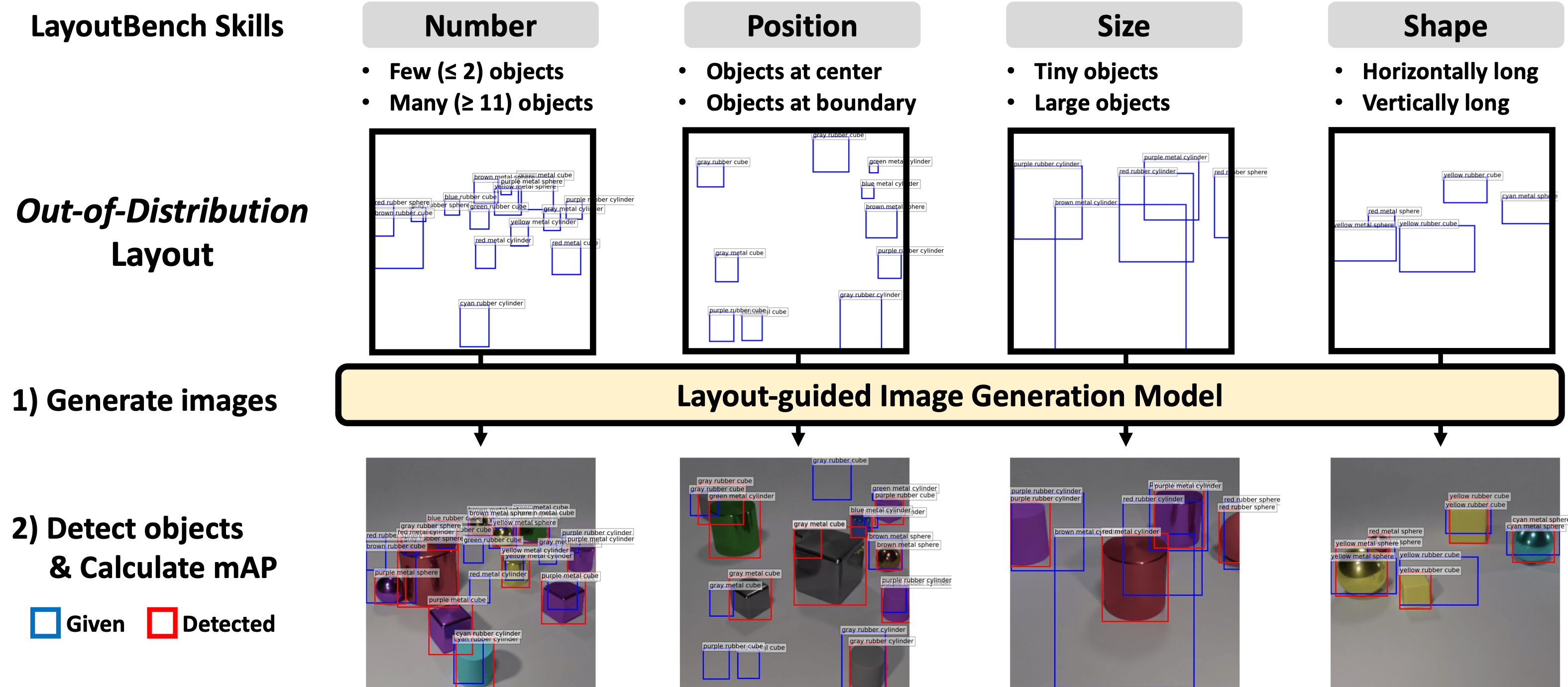

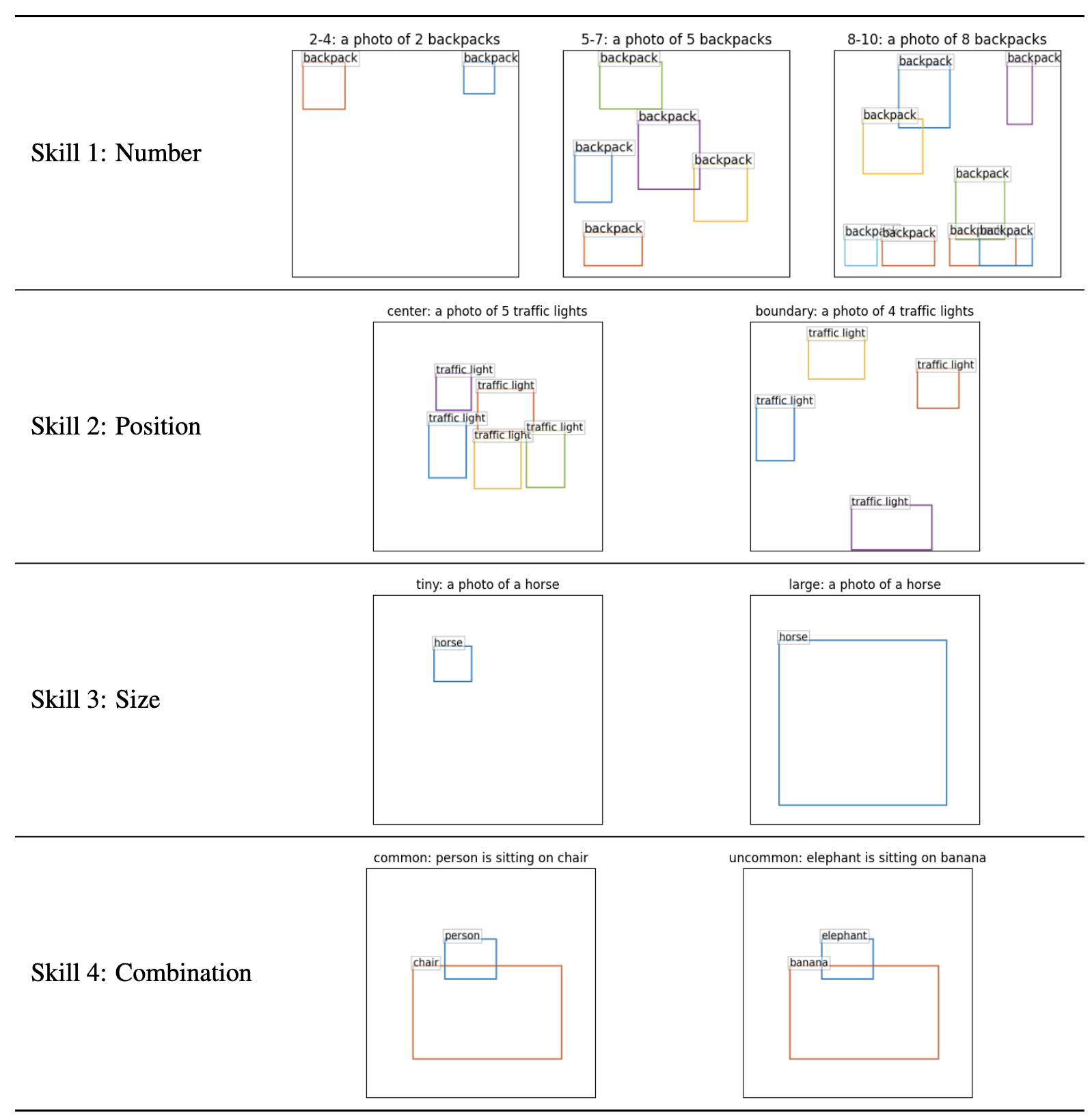

In this paper, we propose LayoutBench, a diagnostic benchmark that examines four categories of spatial control skills: number, position, size, and shape. We benchmark two recent representative layout-guided image generation methods and observe that good ID layout control may not generalize well to arbitrary layouts in the wild (e.g., objects at the image boundary).

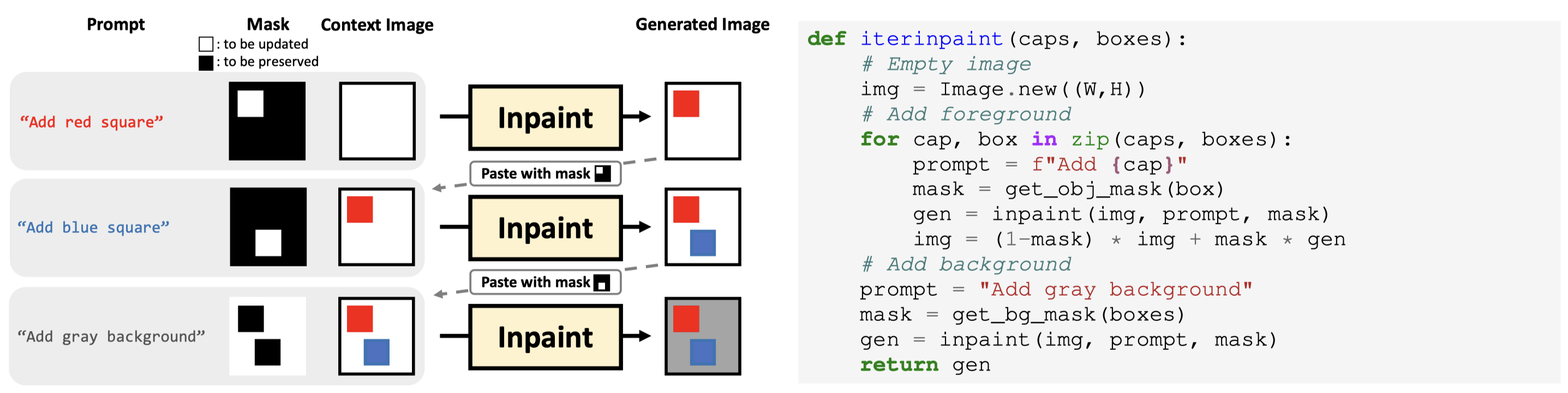

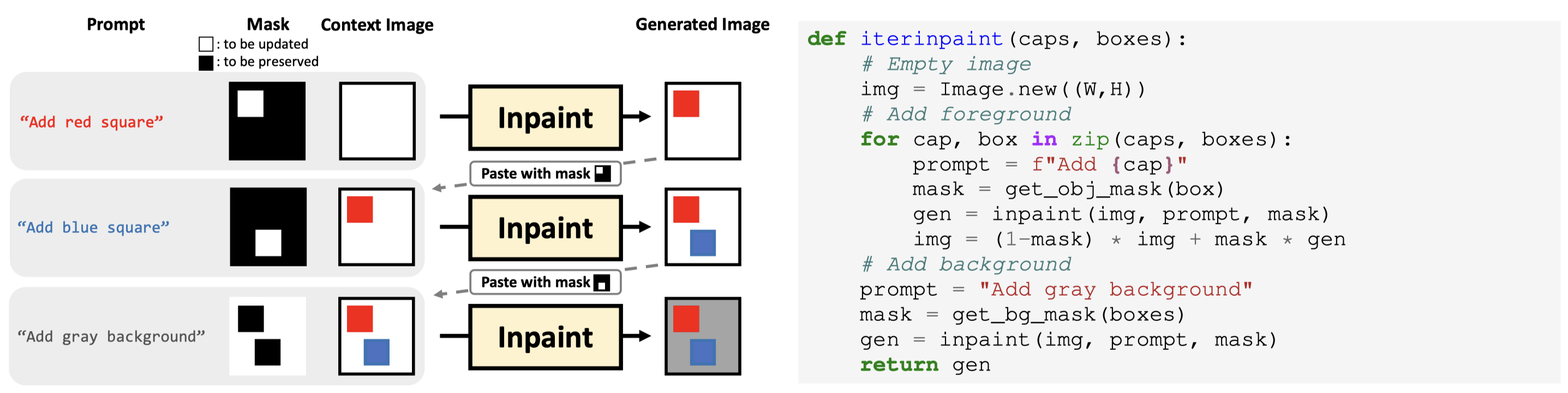

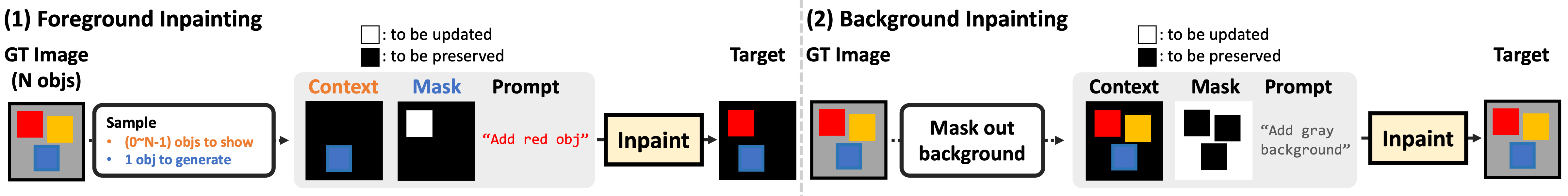

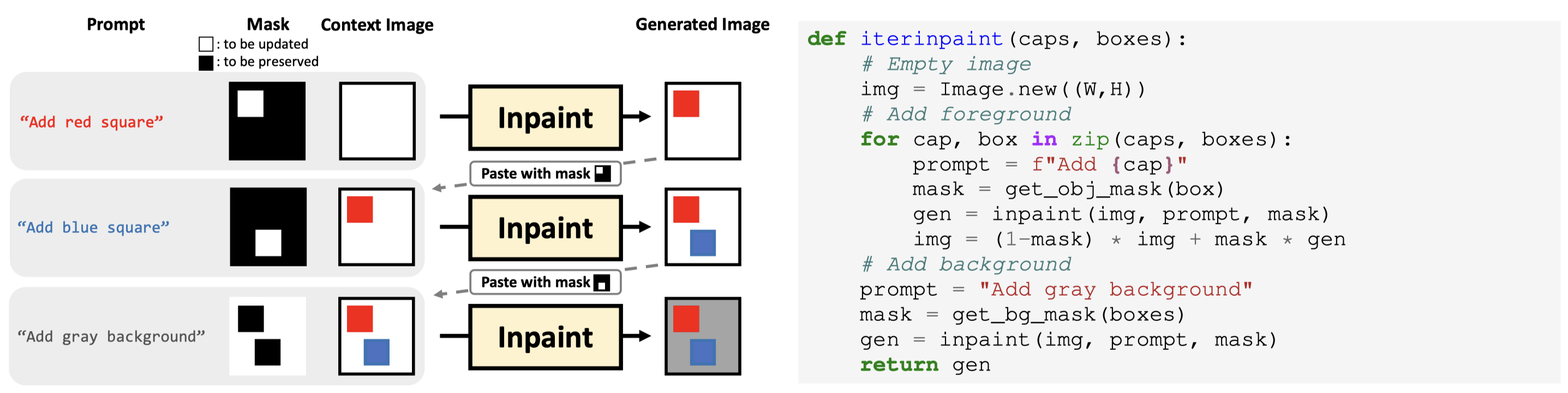

Next, we propose IterInpaint, a new baseline that generates foreground and background regions in a step-by-step manner via inpainting, demonstrating stronger generalizability than existing models on OOD layouts in LayoutBench.

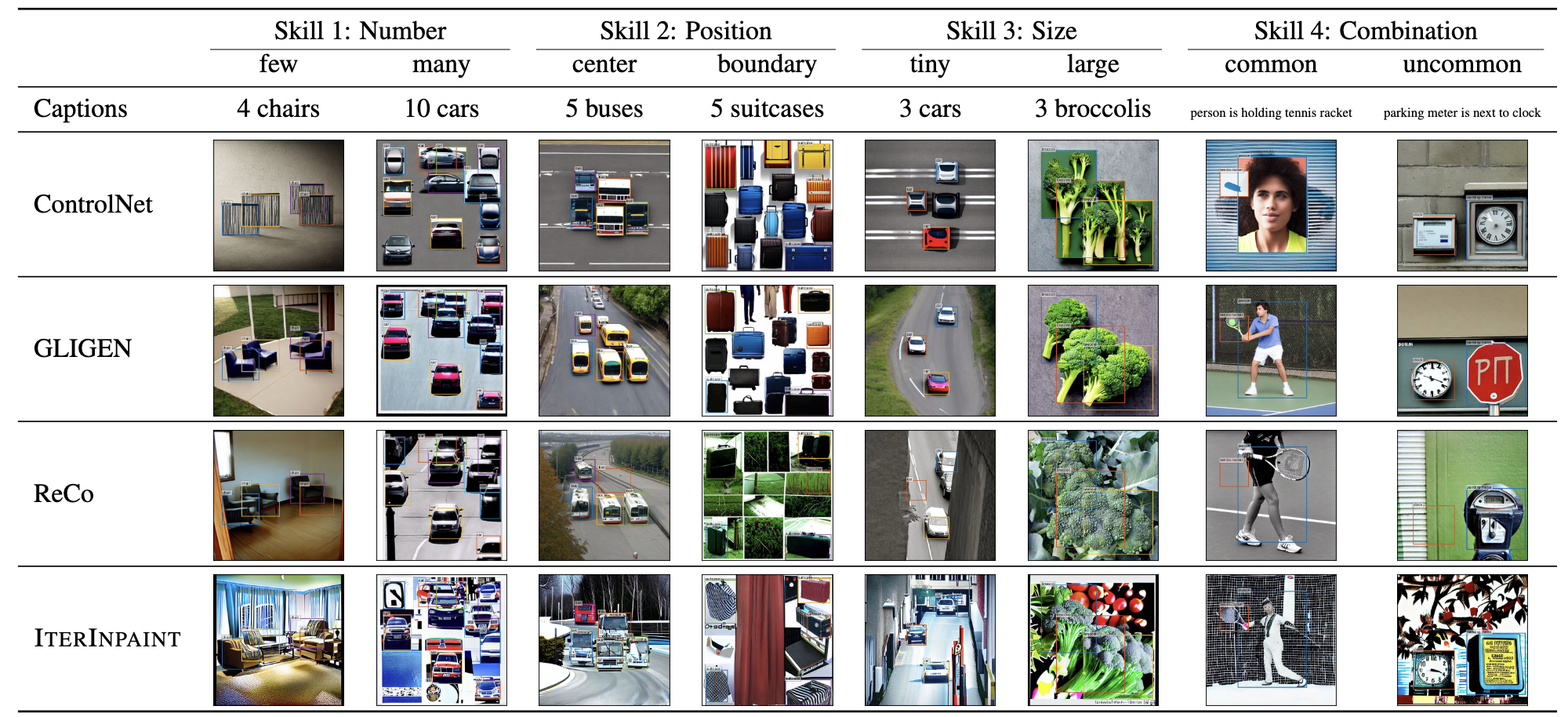
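The step-by-step generation described above can be sketched as a loop over object boxes followed by a final background pass. This is only a minimal illustration under stated assumptions, not the released implementation: `inpaint_fn` is a hypothetical stand-in for the inpainting model, the image is a toy 2D grid, the box convention and the `"Add background"` prompt are made up for the example, and pasting only the masked region back onto the canvas is one reading of the crop&paste variant mentioned in the ablations.

```python
from typing import Callable, List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x0, y0, x1, y1), end-exclusive; assumed convention
Grid = List[List[int]]           # toy stand-in for an image (one value per pixel)
Mask = List[List[bool]]          # True where the model is asked to paint


def box_mask(height: int, width: int, box: Box) -> Mask:
    """Binary mask that is True inside the box and False elsewhere."""
    x0, y0, x1, y1 = box
    return [[x0 <= x < x1 and y0 <= y < y1 for x in range(width)]
            for y in range(height)]


def paste(canvas: Grid, generated: Grid, mask: Mask) -> Grid:
    """Keep the canvas outside the mask; take generated pixels inside it."""
    return [[g if m else c for c, g, m in zip(c_row, g_row, m_row)]
            for c_row, g_row, m_row in zip(canvas, generated, mask)]


def iterative_inpaint(
    height: int,
    width: int,
    objects: Sequence[Tuple[str, Box]],
    inpaint_fn: Callable[[Grid, Mask, str], Grid],  # hypothetical model call
    background_prompt: str = "Add background",      # hypothetical prompt
) -> Grid:
    canvas: Grid = [[0] * width for _ in range(height)]
    filled: Mask = [[False] * width for _ in range(height)]

    # Foreground: one inpainting step per object, in the given order.
    for prompt, box in objects:
        mask = box_mask(height, width, box)
        canvas = paste(canvas, inpaint_fn(canvas, mask, prompt), mask)
        filled = [[f or m for f, m in zip(f_row, m_row)]
                  for f_row, m_row in zip(filled, mask)]

    # Background: a last step over everything no object box covered.
    background = [[not f for f in row] for row in filled]
    return paste(canvas, inpaint_fn(canvas, background, background_prompt),
                 background)
```

Because each step only touches the masked region, the order of `objects` decides which object wins where boxes overlap, which is why generation order is a natural thing to ablate.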

We perform quantitative and qualitative evaluation and fine-grained analysis on the four LayoutBench skills to pinpoint the weaknesses of existing models. Lastly, we show comprehensive ablation studies on IterInpaint, including training task ratio, crop&paste vs. repaint, and generation order.

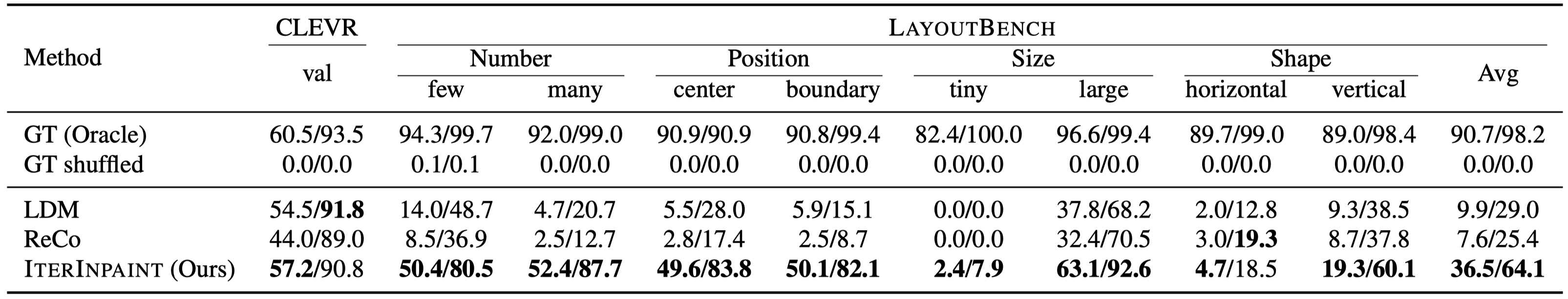

The first row shows the layout accuracy based on GT images. Our object detector achieves high accuracy on both CLEVR and LayoutBench, which shows that the evaluation is reliable. The second row (GT shuffled) shows a setting where, given a target layout, we randomly sample one of the GT images to serve as the generated image. The 0% AP on both CLEVR and LayoutBench means that it is impossible to obtain high AP by generating high-fidelity images that are in the wrong layouts.
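To make the intuition behind the GT-shuffled row concrete, the sketch below scores a set of detected boxes against a target layout using box IoU. It is a simplified proxy and not the AP/AP50 computation used for the reported numbers: the function names, the `(label, box)` format, and the greedy matching at a fixed 0.5 threshold are all assumptions made for illustration.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1); assumed convention
Labeled = Tuple[str, Box]                # (class label, box); assumed format


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ax0, ay0, ax1, ay1 = a
    bx0, by0, bx1, by1 = b
    inter_w = max(0.0, min(ax1, bx1) - max(ax0, bx0))
    inter_h = max(0.0, min(ay1, by1) - max(ay0, by0))
    inter = inter_w * inter_h
    union = (ax1 - ax0) * (ay1 - ay0) + (bx1 - bx0) * (by1 - by0) - inter
    return inter / union if union > 0 else 0.0


def matched_fraction(targets: Sequence[Labeled],
                     detections: Sequence[Labeled],
                     threshold: float = 0.5) -> float:
    """Fraction of target boxes matched by a same-class detection.

    Each detection can be used at most once; each target greedily takes
    the unused same-class detection with the highest IoU >= threshold.
    """
    unused: List[Labeled] = list(detections)
    hits = 0
    for label, box in targets:
        best, best_iou = None, threshold
        for det in unused:
            if det[0] != label:
                continue
            overlap = iou(box, det[1])
            if overlap >= best_iou:
                best, best_iou = det, overlap
        if best is not None:
            unused.remove(best)
            hits += 1
    return hits / len(targets) if targets else 0.0


# Toy layouts (made-up coordinates, not benchmark data).
layout = [("cube", (0, 0, 10, 10)), ("sphere", (20, 20, 30, 30))]
other_layout = [("cube", (50, 50, 60, 60)), ("sphere", (70, 70, 80, 80))]

print(matched_fraction(layout, layout))        # detections follow the layout
print(matched_fraction(layout, other_layout))  # realistic image, wrong layout
```

Under this proxy, an image that is perfectly realistic but drawn for a different layout scores zero, which mirrors why image fidelity alone cannot produce high layout accuracy.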

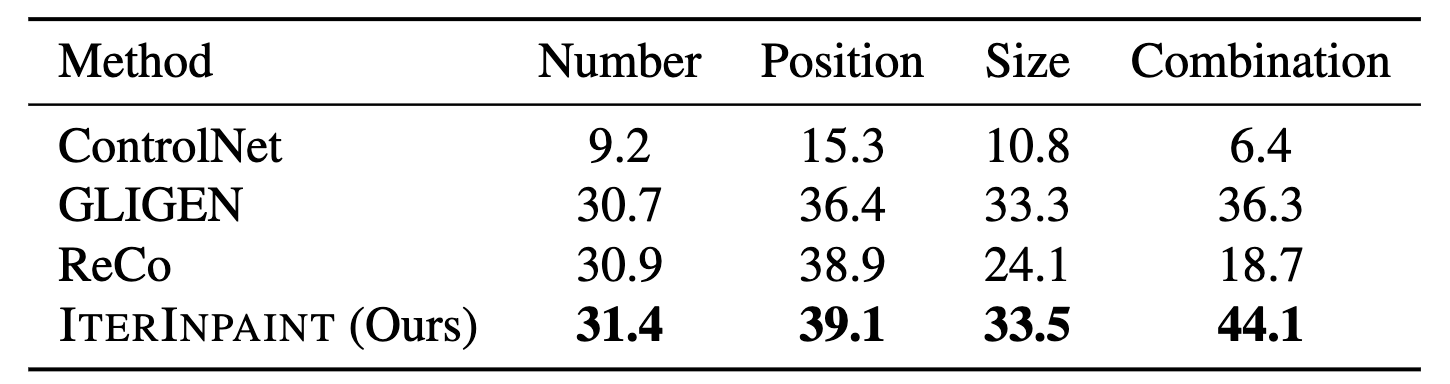

As shown in the bottom half of the table, while all three models achieve high layout accuracy on CLEVR, their layout accuracy drops by large margins on LayoutBench, showing the ID-OOD layout gap. Specifically, LDM and ReCo fail substantially on LayoutBench across all skill splits, with an average drop of 57-70% per skill on AP50 compared to their high AP on the in-domain CLEVR validation split. In contrast, IterInpaint generalizes better to OOD layouts in LayoutBench, while maintaining or even slightly improving layout accuracy on ID layouts in CLEVR.

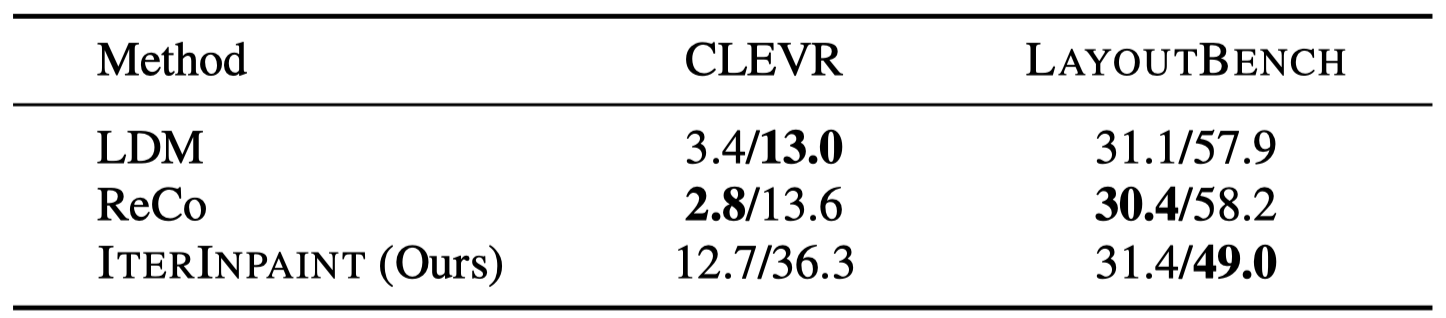

Layout accuracy in AP/AP50(%) on CLEVR and LayoutBench. Best (highest) values are bolded.

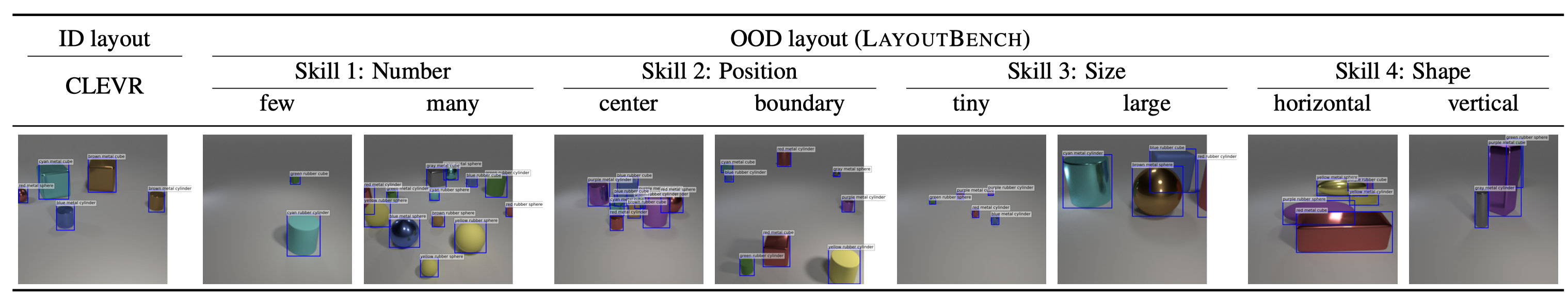

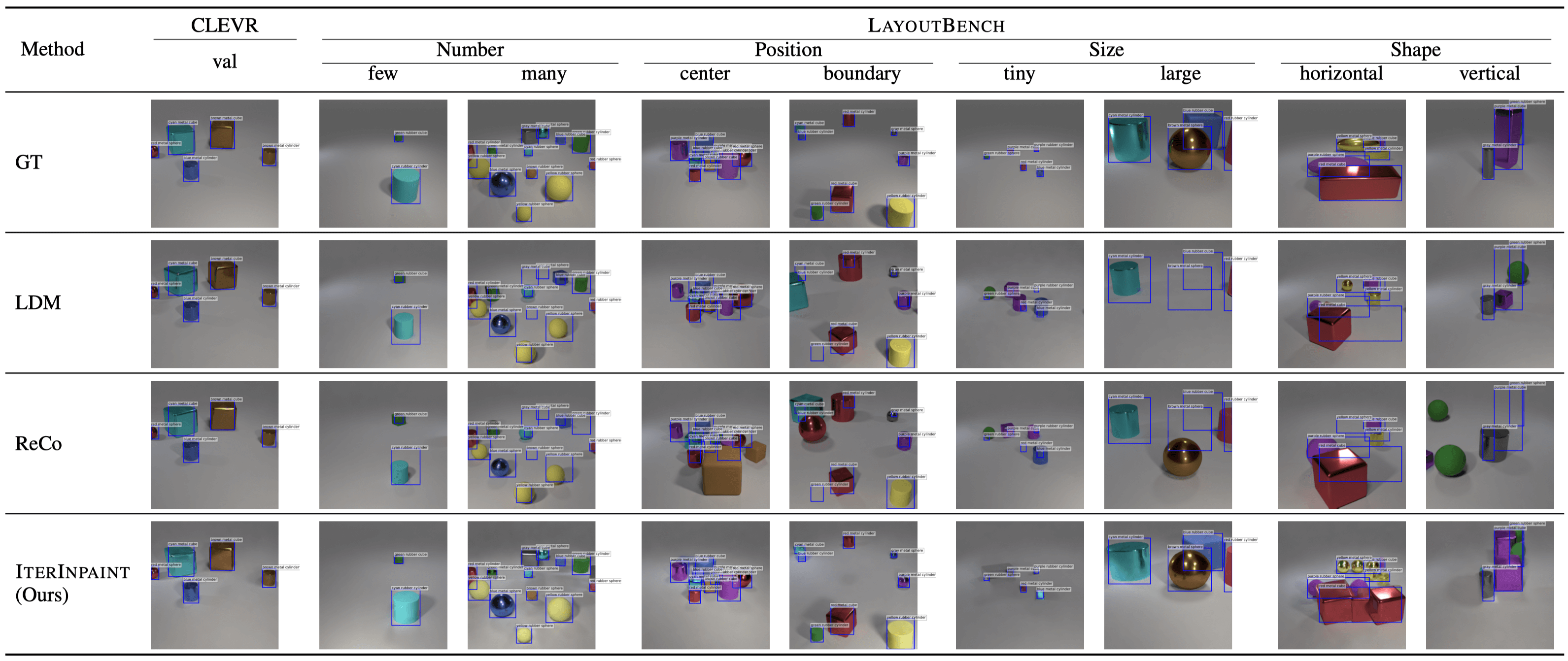

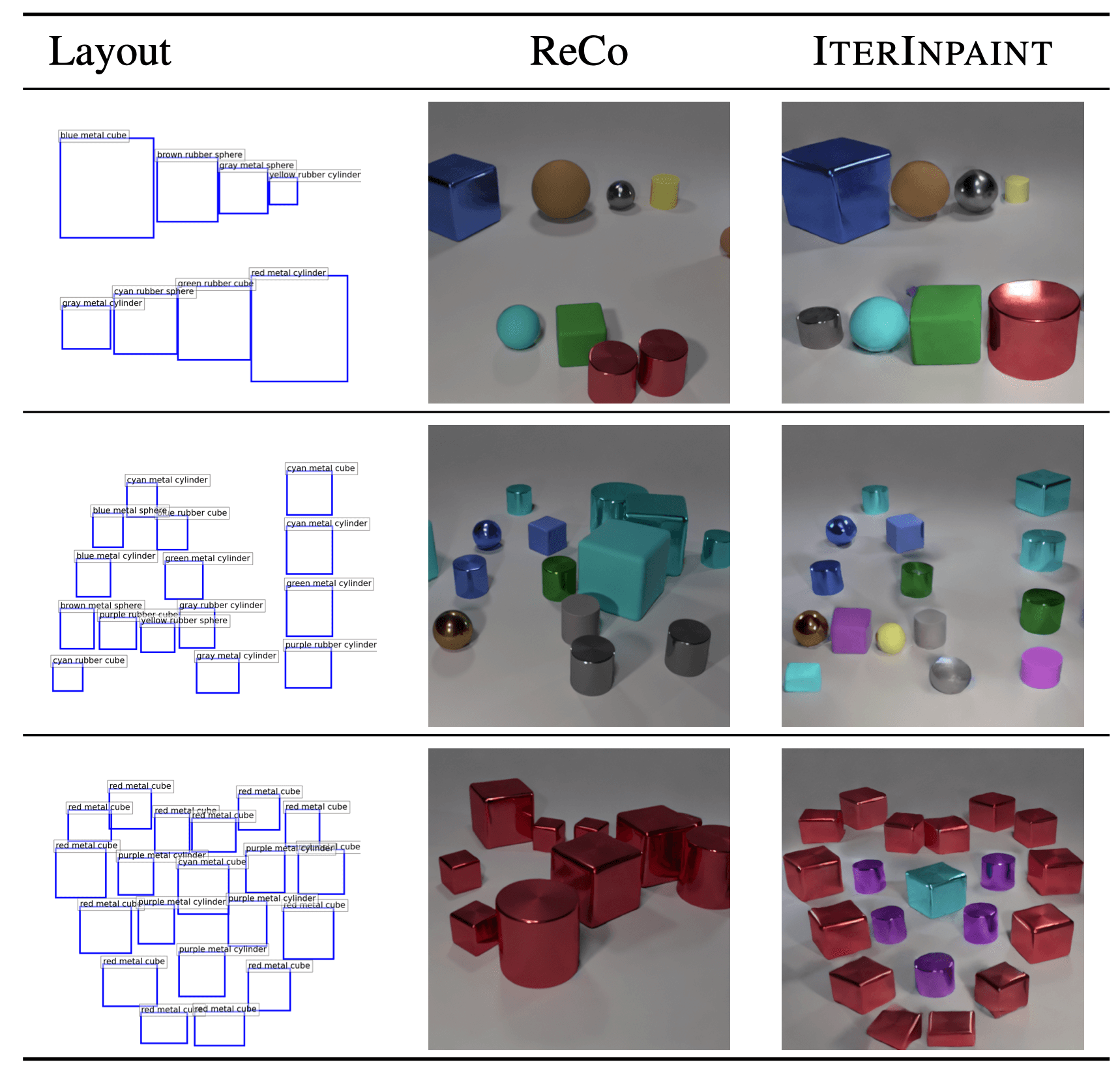

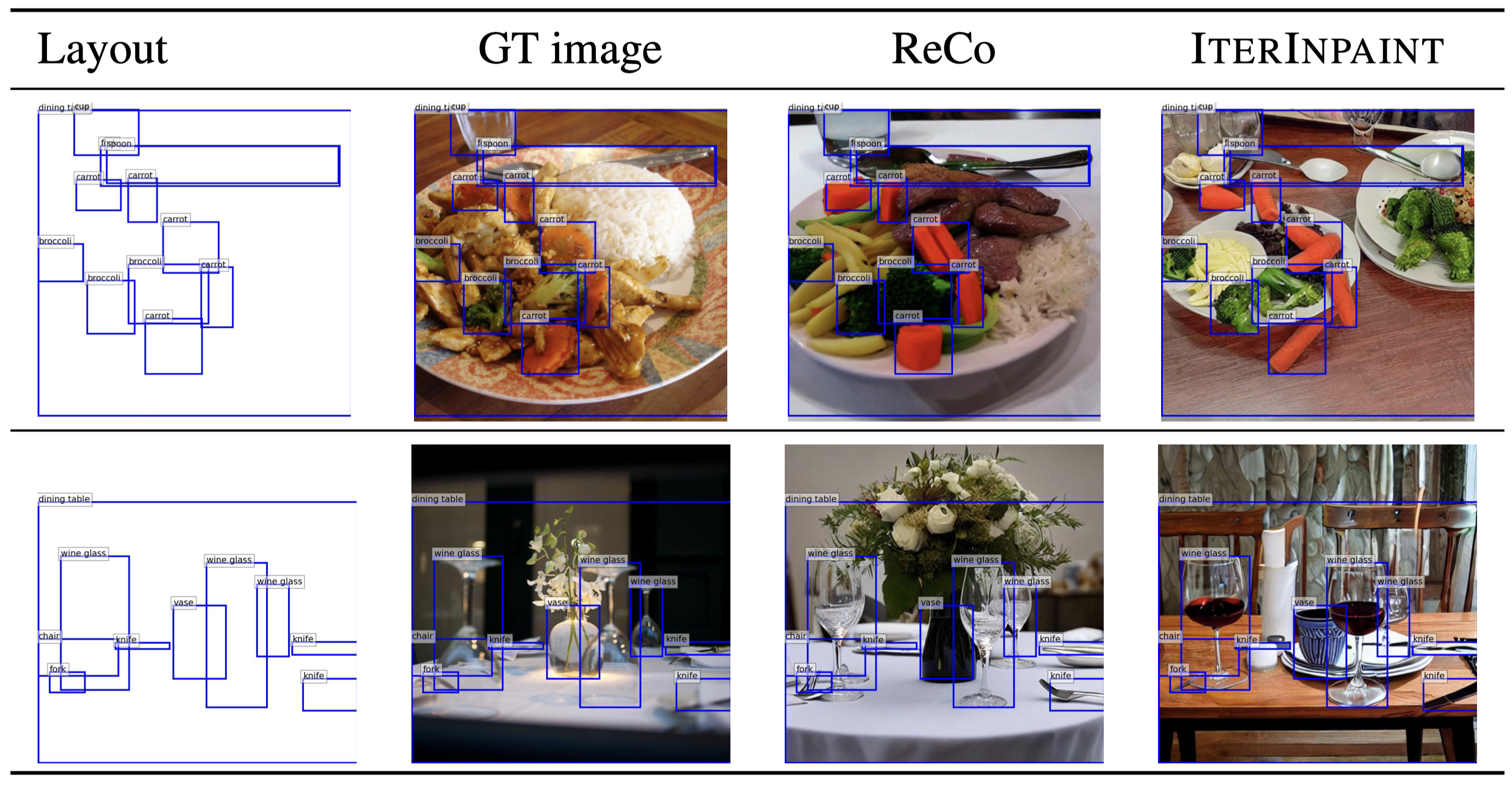

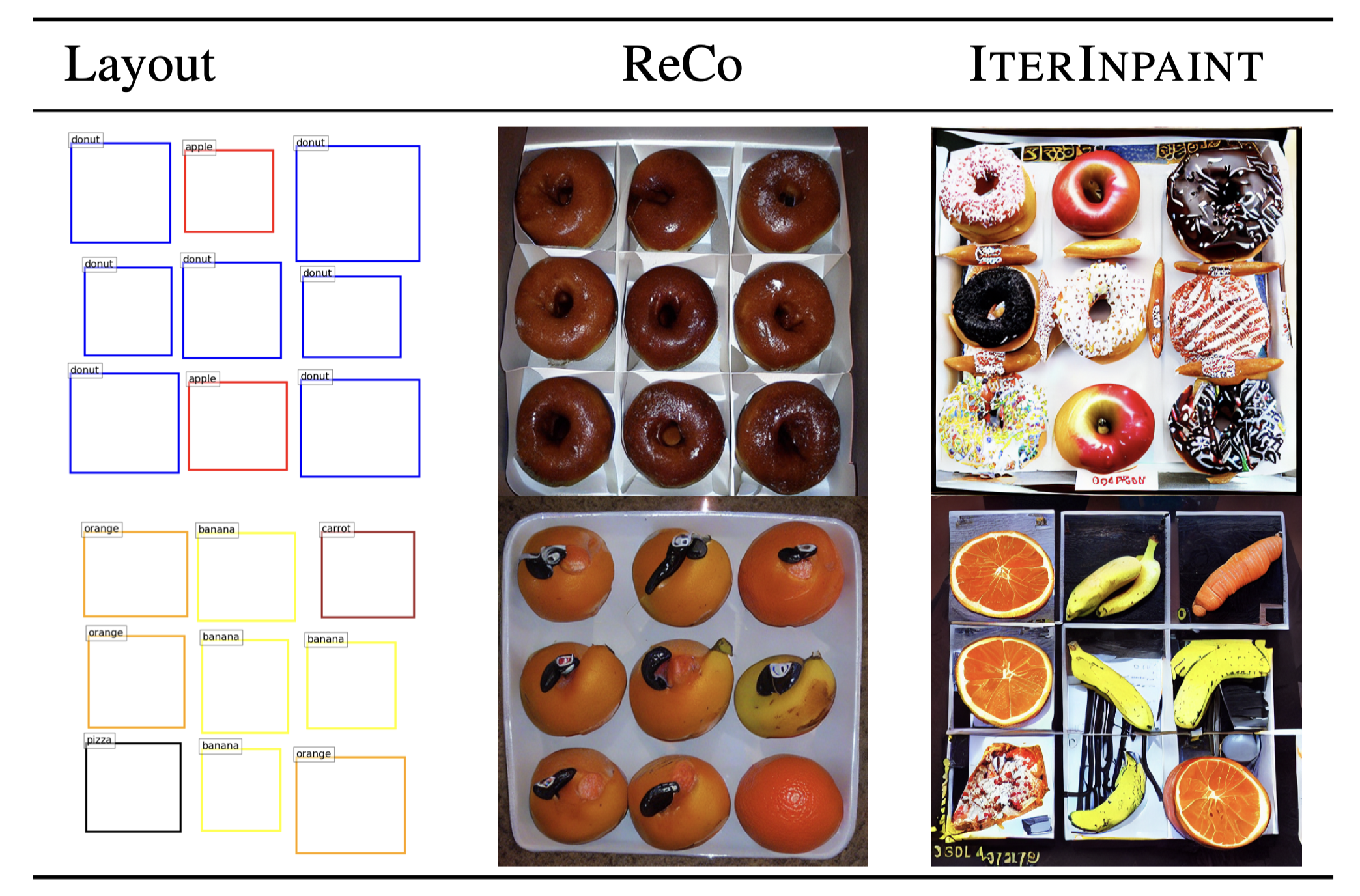

Comparison of generated images on CLEVR (ID) and LayoutBench (OOD). GT boxes are shown in blue.

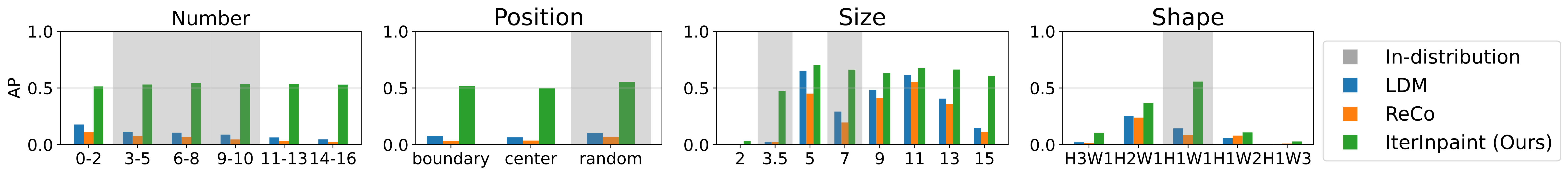

Detailed layout accuracy analysis with fine-grained splits of the four LayoutBench skills. In-distribution splits (same attributes as CLEVR) are colored in gray. For the Shape skill, the splits are named after their height/width ratio (e.g., the H2W1 split consists of objects with a 2:1 height:width ratio).

We show three input layouts: (1) two rows of objects with different sizes, (2) 'AI' written as text, and (3) a heart shape. While ReCo often ignores or misplaces some objects, IterInpaint places objects significantly more accurately. We show the generation process of these images at the bottom.

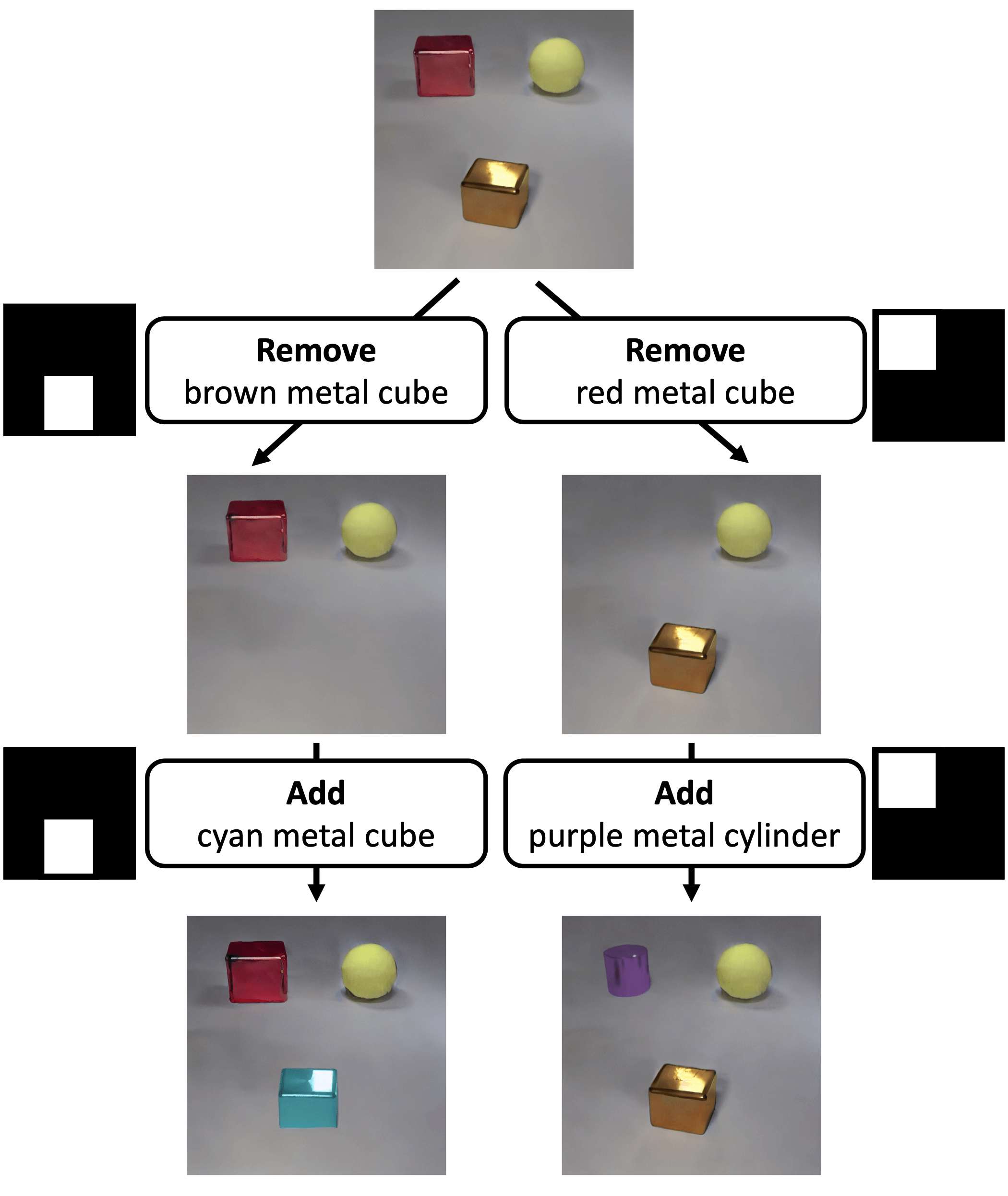

With IterInpaint, users can interactively manipulate images with a binary mask and a text prompt to add or remove objects at arbitrary locations.

@inproceedings{Cho2024LayoutBench,
  author = {Jaemin Cho and Linjie Li and Zhengyuan Yang and Zhe Gan and Lijuan Wang and Mohit Bansal},
  title = {Diagnostic Benchmark and Iterative Inpainting for Layout-Guided Image Generation},
  booktitle = {The First Workshop on the Evaluation of Generative Foundation Models},
  year = {2024},
}